Blame, Shame, & Systems

As I try to acknowledge and unlearn shame to improve my personal life, I’m also considering how I can do my part to stop perpetuating shame-based practices at work. I’ve been asking myself questions like:

- How might shame be affecting those around me?

- Am I knowingly participating in structures that are based on shame?

- What are some ways shame manifests itself?

Let’s Talk Tech Culture

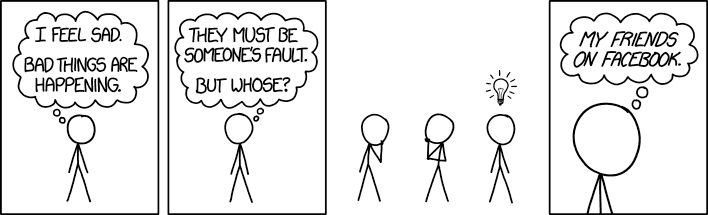

I’m going to go out on a limb here and suggest that when we default to shame-based structures to solve problems, we spend our troubleshooting and post-incident discussions in what are essentially victim-blaming loops. Tech peeps, does this sound familiar to you?

- Discover there was an outage of a thing

- Find last change related to the thing

- Find out who made the last change to the thing

- Tell that person publicly that their change broke the thing and make them fix the thing all alone in the corner of shame

- (BONUS): Take away privileges from that person and reassure stakeholders the issue with the thing will never, ever recur because the problem has been solved

Shame Yields Silence & Inaction

The power of shame lies in its chilling effect on what we do or don’t do. The shame we’ve learned sometimes manifests as a paralyzing fear. Here are a few examples that might sound familiar:

- Not asserting boundaries for fear of fulfilling stereotypes (like “angry Black woman”)*

- Not asking for help for fear of appearing “needy”

- Not articulating needs for fear of appearing “selfish”

- Not seeking justice for fear of being blamed for or bullied about abuse

- Not admitting mistakes for fear of appearing unintelligent (impostor syndrome anyone?) or being blamed for a failure

From a young age, I was taught that abuse could be my fault, and I was given a laundry list of things to do and not do to avoid it. When I was assaulted at a venue by a musician who assaulted me multiple times, I was kicked out for demanding that something be done about him. The venue treated me as a liability who was causing a scene, not as a victim seeking justice.

After that and later situations, it took years to realize “that was not okay, that was not my fault, and (maybe most importantly) I did not deserve that.” I hesitated to advocate for myself. I blamed myself for not speaking up, not fighting harder, or whatever else I convinced myself I could have done to change the outcome. It wasn’t healthy, and it didn’t help me grow as a person.

Naming & Shaming

Victim blaming is despicable because it shames victims out of coming forward. It makes us believe that we won’t be believed or that, instead of being met with support, we will be attacked by the very people to whom we appeal for help. Shame undermines justice.

Ultimately, I think that blame hurts because it’s just another vehicle for shame. When we seek to blame, we seek to find fault. The goal of blaming someone is to weaponize their shame, forcing them to change their behavior to avoid experiencing more shame. In information security, we talk about “naming and shaming” adversaries as a means of deterring them from future malicious activities. Okay, I can get behind that. I can get behind naming and shaming Nazis, too.

So why do we often default to similar approaches when addressing our teammates’ contributions to the systems we build, upgrade, and maintain together? This doesn’t make sense because those use cases are polar opposites. I don’t know who needs to hear this (yes, I do: it’s all of us 🙃), but we should not have adversarial relationships with our teammates! If we desire resilient systems, then we need to start by building cultures that are not formulated around the use of shame as a motivational tool (it’s more of a demotivational tool, right?).

Ask Questions Instead: Moving Beyond Shame-based Structures

To isolate human action as the cause or to start with human action as cause and to not go deeper than that leads one to de-prioritize engineering solutions and over-prioritize behavioral control.

From “People or systems? To blame is human. The fix is to engineer” by Richard Holden, Ph.D.

It’s 2020. From an application development perspective, we are fortunate to have a plethora of automation and plumbing tools to help us continuously lint and compile and parse and test and deploy our way to production without introducing (or reintroducing) known badness into our complex applications and systems. We can also lean on a body of knowledge about resilience and socio-technical systems.

If low-quality code keeps finding its way into production, then some questions to ask might be:

- What improvements will be made to QA practices for the next delivery cycle?

- Is QA getting the time and attention it deserves?

- How might deploying more frequently affect the number of changes in each deployment?

Yes, people will sometimes write bad code. People make mistakes, and that’s okay. Focus on making those mistakes less dangerous.

How about security awareness and users? Our users are an integral part of our systems. Sometimes we want to blame them for clicking the nasty link, installing the trojaned software, etc. Here are some questions we can ask instead:

- Have we asked for feedback on our security awareness efforts (and incorporated it into future iterations)?

- Have users been (effectively) trained about this particular scenario?

- Have technical controls been implemented to address this attack vector?

Yep, folks will fall for phishing, too. Plan on people making mistakes!

We can learn from these types of failures and make our systems more resilient against them. The most important thing is that we resolve to embrace and learn from all of our failures. We should work toward a culture where we do not allow shame to impede our ability to frankly discuss what went well and what was an epic failure.

We cannot allow shame to undermine transparency and still expect good outcomes to magically happen. That means having hard conversations without ego and without shame in order to make meaningful progress. We must close the loop on our failures. In my opinion, this means that every post-incident discussion ends with a concrete list of system improvements that either prevent that failure entirely or reduce its risk and improve our ability to detect and recover from it in the future. Resolve to recover better and faster next time, or make that failure type a thing of the past.

Sometimes Humans Mess Up

Accountability is important. We can avoid defaulting to blaming people and still hold them to what we expect of them as teammates. I think it is possible to address lapses in accountability as long as the expectations are defined, agreed upon, and well understood by everyone involved. If we operate within a culture of safety that supports and encourages these discussions, then we should be able to handle these situations gently and without resorting to shame-based structures. Here are some questions to ask:

- What happened this day/week/month to prevent us from completing this function?

- Do we need to rethink how we are assigning or breaking down work?

- What can we do as a team to prevent this in the future?

- Would it help to devote an hour as a team to complete this function while deadlines are tight/teammate is sick/etc.?

Note: Metrics help.

One thing each of us can do is work on how we react when our teammates allow themselves to be vulnerable. It can be really, really difficult to admit when we’ve made a mistake. When a teammate presents us with transparency to the point of vulnerability, we should thank them for this gift.

The Transparency-Killer

The primary issue with focusing on assigning blame is that it keeps us from addressing systems holistically. Every time we choose to focus on the binary question of who was right or wrong when faced with a failure or incident, we squander a valuable learning opportunity. In applications, for example, rather than asking questions about the people and technologies that make up the system, we tend to apply shame (via blame) to the human components and technology fixes to the technology components. Treating the humans as if they were separate from the system results in a weaker system. The system remains broken in spite of the technology fixes. That’s not continuous improvement.

A focus on blame erodes our sense of psychological safety, making us less likely to speak up when we discover an issue with the system or an error we’ve made. We ignore symptoms of systemic weakness and treat them as unrelated failures instead. Systemic issues take longer to be addressed (if they are addressed at all). Morale suffers. We default to attaching a face to every incident, perhaps so we know where to direct our inevitable emotional responses during times of stress (dumpster fires 🔥). Maybe blame is simply the path of least resistance. There’s a name next to the commit that broke everything, after all.

TL;DR: We should default to interrogating our systems if we wish to move beyond the performance of weaponizing shame and toward addressing systemic issues. We must reject the toxic, shame-based approach if our goal is for systems to be safe, inclusive, and resilient.

➡️ CHALLENGE: The next time you encounter an issue that would previously have been handled by blaming a human, try asking some questions about the entire system instead.

* - See “stereotype threat.”